Deployment Automation

Continuous integration (CI) is an enormous step forward in productivity and quality for every project that adopts it. CI lets teams working together on large and complex systems do so with more confidence and control than they could achieve without it. It also helps ensure that the code we deliver as a team always works, by giving us rapid feedback on any problems we introduce as we change the code.

However, continuous integration is not enough. CI focuses mainly on development teams, not on operations teams. DevOps is about operations as well as developers, so it must cover the whole path from writing code to managing the deployed software.

Most of the waste in releasing software stems from slow deployment, testing and release cycles. It takes so long to get the software into a production-like environment that the resulting deployment is buggy, non-reproducible and difficult to release to production with full confidence that it will work.

Deployment automation means automating the deployment process to the point where a release is carried out simply by triggering a pipeline, which then does the rest.

End To End Approach

The solution to long delivery cycles is to adopt a more holistic, end-to-end approach to delivering software. When we make it easy to deploy the application to testing environments, it creates a powerful feedback loop where the team gets rapid feedback on both the code and the deployment process.

An automated process gets run more often, and is therefore verified more often. Manual processes, by contrast, are difficult to keep up to date as the system evolves. Everything about your deployment must be automated.

The input to a deployment is always a particular version of your software. You run the same build and test jobs on the main trunk as you do in the merge request. The difference is that the trunk is now the source of the build, and it is this version that gets deployed (after passing all the tests, of course).

One consequence of applying this pattern is that you are effectively prevented from releasing builds into production that have not been thoroughly tested and found fit for release. Regression bugs are avoided, and deployment problems are identified and fixed quickly. The whole process is version controlled, so all changes to it are tracked over time.
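One way to make this gate concrete is to identify each build by a checksum of its artifact and to release only a checksum that has passed every required test stage. The sketch below is a minimal illustration under that assumption, using SHA-256 from Python's standard `hashlib`; the `ReleaseGate` class and the stage names are hypothetical, not part of any CI product.

```python
import hashlib
from pathlib import Path


def artifact_digest(path: Path) -> str:
    """Return the SHA-256 hex digest of a built artifact, read in chunks."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            h.update(chunk)
    return h.hexdigest()


class ReleaseGate:
    """Hypothetical record of which artifact digests passed which test stages."""

    def __init__(self, required_stages):
        self.required_stages = set(required_stages)
        self.passed = {}  # digest -> set of stage names that passed

    def record_pass(self, digest: str, stage: str) -> None:
        self.passed.setdefault(digest, set()).add(stage)

    def may_release(self, digest: str) -> bool:
        # Release only if this exact binary passed every required stage;
        # a rebuilt binary has a different digest and starts from zero.
        return self.required_stages <= self.passed.get(digest, set())
```

Because the gate is keyed on the digest rather than on a branch or version label, a binary that was rebuilt after testing cannot slip through, even if it came from the same commit.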

Multi-stage deployment

A good deployment pipeline captures the manual actions that you would normally do before deploying software to production.

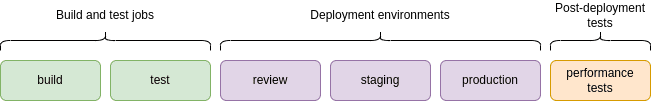

The pipeline is divided into stages.

- Build and test: the usual build and test stages verify that the software works at the technical level. The software compiles, passes a suite of tests (mostly unit and integration tests) and passes code analysis.

- Automated acceptance tests: this stage verifies that the system works on functional and non-functional levels. This checks that the behavior of the system meets the needs of its users and that the system meets its specifications.

- Manual testing: at this stage the system is tested manually to find bugs that may have been missed by automated tests. This stage includes exploratory testing and manual user acceptance testing.

- Production deployment: the software is deployed to production, and this stage automates the deployment itself.

- Post-deployment testing: after deployment you can run performance tests and other non-destructive tests that measure the efficiency of the system while it runs in production.
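The stage ordering above can be sketched as a small fail-fast runner: stages run in sequence, a failing stage stops the pipeline, and the manual testing stage only counts as passed once a person approves it. This is a minimal illustration, not the API of any real CI system; `Stage`, `run_pipeline`, `approve` and the `deploy` helper are hypothetical names. The same `deploy` function is used for staging and production, so the production deployment follows a path that has already been exercised.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Stage:
    name: str
    run: Callable[[], bool]  # returns True when the stage passes
    manual: bool = False     # True if a person must sign off before promotion


@dataclass
class PipelineResult:
    completed: List[str] = field(default_factory=list)
    failed_at: Optional[str] = None  # name of the first stage that failed


def deploy(env: str, log: List[str]) -> bool:
    """Hypothetical deploy step: one code path for every environment."""
    log.append(f"deploy:{env}")
    return True


def run_pipeline(stages: List[Stage], approve: Callable[[str], bool]) -> PipelineResult:
    """Run stages in order and stop at the first one that fails or is rejected."""
    result = PipelineResult()
    for stage in stages:
        passed = stage.run()
        if passed and stage.manual:
            # Manual testing only counts once a person approves the build.
            passed = approve(stage.name)
        if not passed:
            result.failed_at = stage.name
            return result  # later stages, including production, never run
        result.completed.append(stage.name)
    return result


if __name__ == "__main__":
    log: List[str] = []
    stages = [
        Stage("build-and-test", lambda: True),
        Stage("acceptance", lambda: deploy("staging", log)),
        Stage("manual-testing", lambda: True, manual=True),
        Stage("production", lambda: deploy("production", log)),
        Stage("post-deployment", lambda: True),
    ]
    # Stand-in approver that always signs off; a real one would ask a person.
    print(run_pipeline(stages, approve=lambda name: True), log)
```

A real pipeline would also pass the tested artifact from stage to stage rather than rebuilding it; the runner here only models the ordering and gating.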

Tools

- Docker: this helps with deployments that involve multiple software packages and services that must be deployed at the same time. It also helps with automating deployment of your build environment itself.

- Ansible: this helps with deployments that involve running commands on remote systems. Ansible allows you to make configuration changes to many machines at the same time over SSH.

Best practices

- Build binaries once: do not compile code repeatedly in different contexts. When you run your end-to-end tests, do so with the production binary. Build all your binaries once and then reuse these artifacts in subsequent pipelines instead of building them again. Most importantly, you want to deploy the same executables that you have tested. Even small differences in the build environment can introduce faults that would be missed unless the binary is tested before deployment.

- Docker: use Docker for packaging and deploying web services. Such applications usually involve many configuration files and several services that must be configured and started in sequence. A Docker image packages all of this, so that you can deploy the whole application at once and do so reliably.

- Deploy the same way: use the same process to deploy to every environment, whether development, review, staging or production. This exercises your deployment process thoroughly, so it is less likely to fail when you finally deploy to production.

- Smoke test: whenever you deploy, run a small test to make sure the application is up and running. Even the Docker images you build can be smoke tested by running tests inside the image before pushing it to a registry.

- Deploy to a copy of production: make sure that your testing and staging environments do not differ from the production environment.

- Avoid nightly builds: make each change propagate through the entire pipeline instantly. If some tests take longer, run them as part of a merge result pipeline, but still always run them before changes are merged. You can still use nightly builds for other purposes, but not to defer tests, because doing so breaks continuous delivery and increases the chance of broken code ending up in production.
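A post-deployment smoke test can be as small as polling a health URL until it answers. The sketch below assumes the deployed service exposes an HTTP endpoint that returns status 200 when it is up; the `/health` path, the retry counts and the `smoke_test` name are illustrative assumptions. It uses only Python's standard `urllib.request`.

```python
import time
import urllib.request


def smoke_test(url: str, attempts: int = 10, delay: float = 1.0, timeout: float = 2.0) -> bool:
    """Return True once `url` answers HTTP 200, False if it never does."""
    for attempt in range(attempts):
        try:
            with urllib.request.urlopen(url, timeout=timeout) as resp:
                if resp.status == 200:
                    return True
        except OSError:
            # URLError, HTTPError (4xx/5xx), refused connections and timeouts
            # are all OSError subclasses: treat them as "not up yet".
            pass
        if attempt < attempts - 1:
            time.sleep(delay)
    return False
```

For example, a deploy job might call `smoke_test("http://staging.example.internal/health")` (a hypothetical URL) right after deploying and fail the pipeline if it returns `False`. Retrying with a delay tolerates services that take a few seconds to start, without masking a deployment that never comes up.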
